What is Airflow

Apache Airflow is a popular open-source orchestration tool for authoring, scheduling, and monitoring data pipelines and workflows.

It offers several features typical of the modern data tech stack:

- Workflow as code: pipelines are defined in code, so you can manage them with proper version control.
- Horizontally scalable: tasks can be distributed across multiple worker nodes.

Compare with Cron

Don't get me wrong, I love cron! But compared with cron, Airflow has clear advantages, along with one notable cost, summarized in the table below.

| Feature | Cron | Airflow |
| --- | --- | --- |
| User Interface | Limited, often command line-based | Powerful web-based UI for managing workflows |
| Scalability | Limited, runs on a single machine | Horizontally scalable, can distribute tasks across multiple worker nodes |
| Task Management | Limited, basic task scheduling | Sophisticated task scheduling with dependency management |
| Resources | Low resource requirements | High resource requirements |

Enough introduction; let's dive into the details.

Airflow Main Components

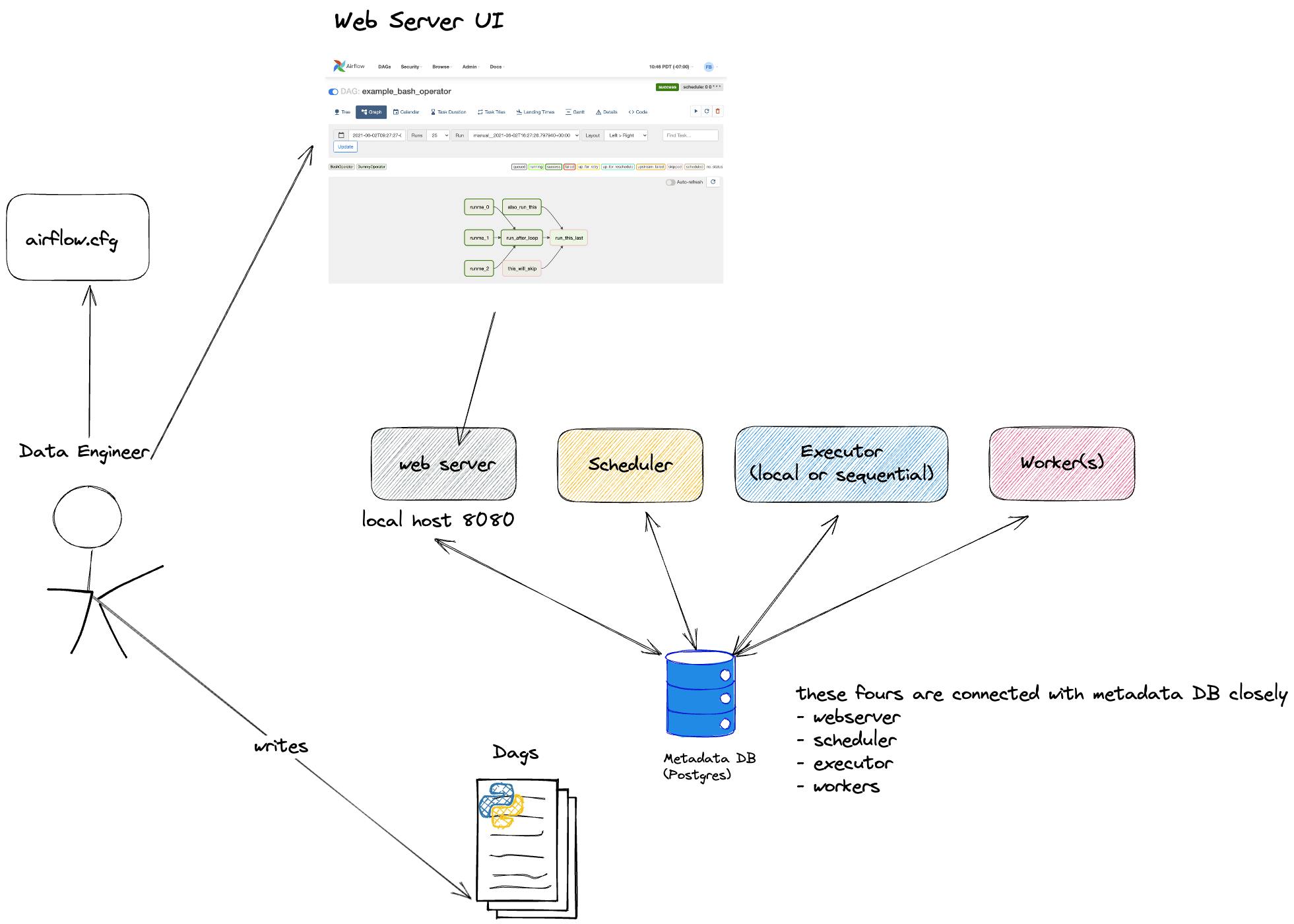

The overall architecture of how a data engineer interacts with Airflow is illustrated in the diagram below.

You, as a data engineer, write DAGs in Python (covered later; think of them as code for now) to describe your workflows, and you interact with Airflow through the CLI or the web server UI to schedule and monitor them.

Airflow has five main components, described below.

| Component | Description |
| --- | --- |
| Webserver | Provides a user interface for data engineers to monitor and control workflows. |
| Scheduler | Triggers tasks and manages dependencies between tasks. "Dependencies" is just a fancy word for relationships and execution order in this context. |
| Executor | Decides how and where tasks run: it takes care of task distribution, resource allocation, and task queues. |
| Worker | Executes the tasks assigned to it by the executor and communicates with the executor to receive tasks and report status. |
| Metadata Database | Stores information about workflows, tasks, and their dependencies. Out of the box, Airflow uses SQLite; for production, a database such as Postgres is recommended (tuple indexing for the win)! |
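
To make the scheduler/executor split a bit more concrete, here is a minimal sketch in plain Python. It is not how Airflow is implemented: the task names are made up, and the standard-library `graphlib.TopologicalSorter` (Python 3.9+) stands in for the scheduler's dependency bookkeeping.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each key lists the tasks it depends on (its upstream tasks).
upstream = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"transform"},
}


def run_workflow(upstream):
    """Run every task only after all of its upstream tasks have finished."""
    sorter = TopologicalSorter(upstream)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies loop
    finished = []
    while sorter.is_active():
        # "Scheduler" step: find the tasks whose dependencies are all satisfied.
        for task in sorted(sorter.get_ready()):
            # "Executor/worker" step: a real worker would run the task here.
            finished.append(task)
            sorter.done(task)  # report status so downstream tasks unlock
    return finished


order = run_workflow(upstream)
print(order)
```

Note that `load` and `report` become ready at the same time; in a real deployment they could run in parallel on different workers.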

What is a DAG?

A directed acyclic graph (DAG) is a concept from graph theory, and the graph is also a common data structure. In this section, let's answer three questions:

- What is a graph?
- How does a graph relate to Airflow?
- What is a DAG in Airflow?

Graph concept in airflow

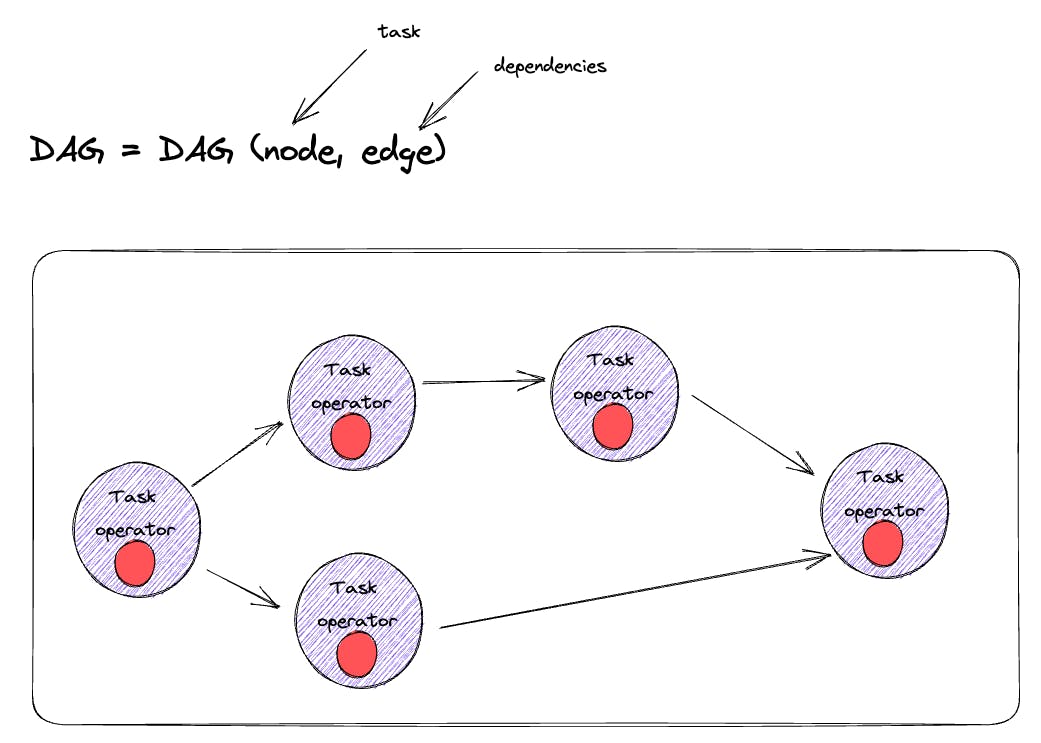

To describe an arbitrary graph, you use nodes (vertices) and edges. Mathematically, it is written as

$$G=\left(V,E\right)$$

where G is the graph, V is the set of vertices, and E is the set of edges.
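
As a rough illustration, the same definition can be written down in plain Python. The vertex names below are made up, and the edges are assumed to be directed (source, destination) pairs.

```python
# Vertices V and directed edges E of a small, made-up graph G = (V, E).
V = {"a", "b", "c", "d"}
E = {("a", "b"), ("a", "c"), ("b", "d"), ("c", "d")}


def adjacency(vertices, edges):
    """Build an adjacency list: vertex -> sorted list of vertices it points to."""
    adj = {v: [] for v in sorted(vertices)}
    for src, dst in sorted(edges):
        adj[src].append(dst)
    return adj


G = adjacency(V, E)
print(G)
```

The adjacency list is just one convenient way to store the pair (V, E); the set of edges alone carries the same information.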

In the context of Airflow, nodes and edges are implemented as tasks and dependencies:

$$\begin{aligned} \text{node} &= \text{task}\\ \text{edge} &= \text{dependency} \end{aligned}$$

A DAG is a special kind of graph, and the idea is prevalent across the modern data tech stack: Airflow, PySpark, and dbt (lineage graph) all use it. A DAG consists of vertices and edges in which every edge points from one vertex to another (directed) and no path ever loops back to a vertex it has already passed through (acyclic).
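
One way to check the "no loop" property is Kahn's algorithm: keep removing vertices that nothing points to, and see whether every vertex eventually gets removed. The sketch below is a plain-Python illustration with made-up task names, not something taken from Airflow.

```python
from collections import deque


def is_dag(vertices, edges):
    """Return True if the directed graph has no cycle (Kahn's algorithm)."""
    indegree = {v: 0 for v in vertices}
    downstream = {v: [] for v in vertices}
    for src, dst in edges:
        downstream[src].append(dst)
        indegree[dst] += 1

    # Start from the vertices that nothing points to.
    queue = deque(v for v in vertices if indegree[v] == 0)
    visited = 0
    while queue:
        v = queue.popleft()
        visited += 1
        for nxt in downstream[v]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                queue.append(nxt)
    # Vertices on (or downstream of) a cycle never reach in-degree 0,
    # so they are never visited.
    return visited == len(vertices)


tasks = ["extract", "transform", "load"]
chain = [("extract", "transform"), ("transform", "load")]
loop = chain + [("load", "extract")]

print(is_dag(tasks, chain))  # True
print(is_dag(tasks, loop))   # False
```

The second call fails the check because `load` points back to `extract`, so no task could ever be the first one to run.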

DAGs are extremely useful for describing workflows; a real-world use case is illustrated in the figure below.

DAG concept in airflow

As discussed earlier, in Airflow each node is a task and each edge is a dependency, as illustrated in the figure below.

Each task is carried out by one operator, such as BashOperator to run a bash command or PythonOperator to run a Python function. It looks something like this:

```python
from datetime import datetime, timedelta

# Import paths assume Airflow 2.x.
from airflow import DAG
from airflow.operators.bash import BashOperator

# Settings applied to every task in the DAG unless a task overrides them.
default_args = {
    'owner': 'adamzhang',
    'retries': 5,
    'retry_delay': timedelta(minutes=2),
}

with DAG(
    dag_id="our_first_dag_v3",
    default_args=default_args,
    description="this is our first dag we write",
    start_date=datetime(2023, 5, 16),
    schedule_interval='@daily',
) as dag:
    # set up our first node
    task1 = BashOperator(
        task_id='first_task',
        bash_command="echo hello dag",
    )

    task2 = BashOperator(
        task_id='second_task',
        bash_command="sleep 5",
    )

    task3 = BashOperator(
        task_id='third_task',
        bash_command="date",
    )

    # set up dependency
    # task1.set_downstream(task2)
    # task2.set_downstream(task3)

    # with bitshift operator
    task1 >> task2
    task2 >> task3
```

From the code snippet above, you can see that:

- each task is defined with BashOperator
- dependencies are defined with the bitshift operator (>>)
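
If you are wondering how a bitshift operator can define a dependency, the trick is Python operator overloading. The toy `Task` class below is a hypothetical stand-in, not Airflow's real implementation; it only sketches the idea that `a >> b` can be made to mean "b runs after a".

```python
class Task:
    """Toy stand-in for an operator; NOT Airflow's real implementation."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.downstream = []

    def set_downstream(self, other):
        # Record an edge from this task to `other`.
        self.downstream.append(other)

    def __rshift__(self, other):
        # `self >> other` records the edge and returns `other`, so chains work.
        self.set_downstream(other)
        return other


task1, task2, task3 = Task("first_task"), Task("second_task"), Task("third_task")
task1 >> task2 >> task3

edges = [(t.task_id, d.task_id) for t in (task1, task2, task3) for d in t.downstream]
print(edges)
```

Because `__rshift__` returns the right-hand task, the two dependencies can be written as a single chain.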

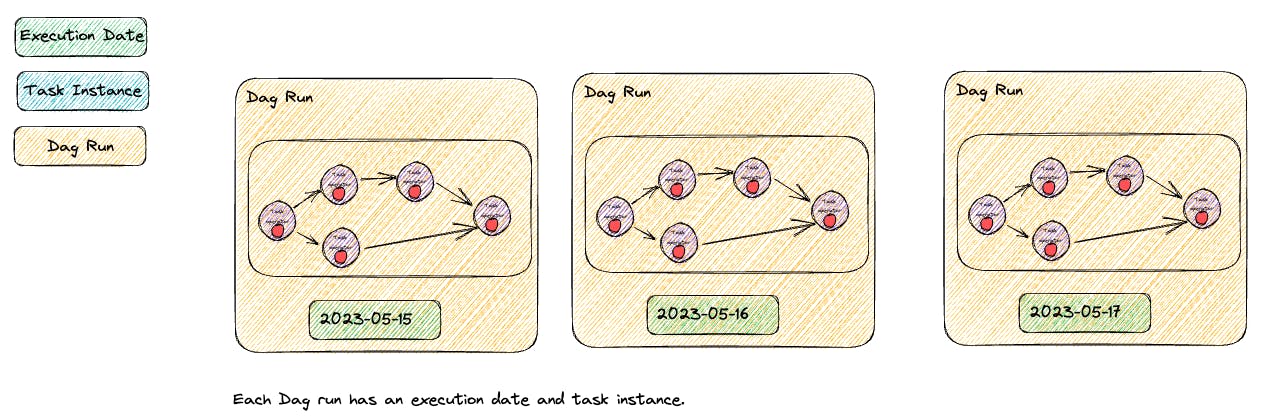

Let's say you wish to run your DAG daily. Each DAG run has an execution date and its own task instances, as illustrated in the figure below.

The DAG code you have written is a blueprint (think of a class), and on each scheduled execution date an instance of the DAG is spun up and run.
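
The blueprint-versus-instance idea can be sketched in plain Python. Everything here (`DagRun`, `daily_runs`, the instance naming) is hypothetical and only mirrors the concept; it ignores the details of how Airflow actually assigns execution dates.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class DagRun:
    """One concrete run of a DAG for a single execution date."""
    dag_id: str
    execution_date: date
    task_instances: tuple


def daily_runs(dag_id, task_ids, start, days):
    """Create one run per day; each run gets its own task instances."""
    runs = []
    for offset in range(days):
        day = start + timedelta(days=offset)
        instances = tuple(f"{task_id}@{day.isoformat()}" for task_id in task_ids)
        runs.append(DagRun(dag_id, day, instances))
    return runs


runs = daily_runs(
    "our_first_dag_v3",
    ["first_task", "second_task", "third_task"],
    date(2023, 5, 16),
    3,
)
for run in runs:
    print(run.execution_date, run.task_instances)
```

The same three task definitions produce a fresh set of task instances for every execution date, which is what lets each day's run succeed, fail, or retry independently.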

Summary

In this article, we covered:

- what Airflow is and how it differs from cron
- the main components of Airflow
- the DAG concept in the context of Airflow
- what tasks, dependencies, DAG runs, execution dates, and task instances are